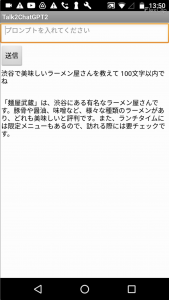

App Inventor Edition: Let's Talk with ChatGPT! (Part 2): Adding Features to Part 1

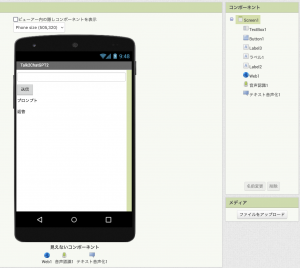

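I have been using GPT-4 to build iPhone apps from the tutorial pages of App Inventor apps, and I have already built five iPhone apps this way. Writing a tutorial page for every app is tedious, though. I had been looking for another way to give GPT-4 information about an App Inventor app, and I finally found one. In this post I add voice input and output to the App Inventor version of "Let's Talk with ChatGPT! (Part 1)" to create "Let's Talk with ChatGPT! (Part 2)". One caveat: GPT-4 did not give sensible answers unless I wrote the prompts in English.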

Preparation

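- In the Japanese version of App Inventor, select the App Inventor "Let's Talk with ChatGPT! (Part 1)" project. From the Projects menu, run "Export selected project (.aia) to my computer" to download the .aia file to your local computer.

- On your local computer, change the extension of the downloaded file from .aia to .zip and extract it.

- The extracted folder (including its subfolders) contains three files: Screen1.bky, Screen1.scm, and project.properties. These are the files we send to GPT-4.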
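Renaming and extracting the archive by hand works fine. Because an .aia file is an ordinary ZIP archive, you can also pull the three files out with a short Python script. The sketch below assumes the project has a Screen1. `read_project_files` is a hypothetical helper. It matches files by name only, so it does not depend on the exact subfolder layout inside the archive; if a project has several screens, only Screen1's files are read.

```python
import zipfile
from pathlib import PurePosixPath

# The three files the article sends to GPT-4
WANTED = ("project.properties", "Screen1.scm", "Screen1.bky")


def read_project_files(aia_path):
    """Return {file name: text} for the three files inside an .aia archive.

    zipfile opens the .aia directly, so renaming it to .zip is not needed.
    aia_path may be a path or a binary file-like object.
    """
    found = {}
    with zipfile.ZipFile(aia_path) as archive:
        for member in archive.namelist():
            name = PurePosixPath(member).name  # drop the folder part
            if name in WANTED and name not in found:
                found[name] = archive.read(member).decode("utf-8")
    missing = [n for n in WANTED if n not in found]
    if missing:
        raise FileNotFoundError(f"not found in archive: {missing}")
    return found
```

For example, `read_project_files("Text2ChatGPT.aia")` (the file name is hypothetical) returns the three file contents as strings, ready to paste into the prompt.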

Conversation with GPT-4

Prompt

The source code is long, so it is omitted here. In the actual prompt, I pasted each file's contents after its file name.

Do you understand what the App Inventor project files doing below?

project.properties:

Screen1.scm:

Screen1.bky:
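The prompt layout is the question first, then each file name followed by its pasted contents. A small helper can assemble it. The sketch below is hypothetical: `build_prompt` is not part of the original workflow, and it assumes the file contents are already loaded as strings.

```python
QUESTION = "Do you understand what the App Inventor project files doing below?"
ORDER = ("project.properties", "Screen1.scm", "Screen1.bky")


def build_prompt(files, question=QUESTION):
    """Return the question followed by 'name:' + contents for each file."""
    parts = [question]
    for name in ORDER:
        # File name on its own line, then the pasted file contents
        parts.append(f"{name}:\n{files[name].strip()}")
    return "\n\n".join(parts)
```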

Response

These are MIT App Inventor project files which define an app that interacts with the OpenAI GPT-3 model to provide a chat interface. Let’s break down what each file is doing:

- project.properties: This file contains metadata about the project, such as the app name, version, primary and accent colors, location usage, etc. It also defines the main screen of the application and the location of source files, assets, and build files.
- Screen1.scm: This file describes the components of Screen1 in the form of a JSON object. It specifies the UI components and their properties such as Text Box, Button, Labels, and Web components.
- Screen1.bky: This file is written in Blockly, a visual programming language developed by Google. It defines the functionality of the application. Here's a high-level overview of what this file is doing:

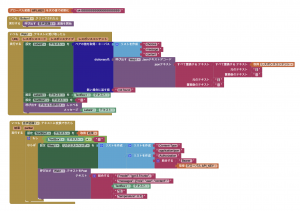

- The app takes input text from `TextBox1`.
- When `Button1` is clicked, if the input text is not empty, a web request is made to the OpenAI API. The headers for this request are set to include the appropriate `Content-Type` and `Authorization` (with a global API key). The body of the POST request is formed as per the OpenAI API specifications, including the input text as the message from the user to the GPT-3 model.
- When the response from the API (`Web1.GotText` event) is received, it is decoded from JSON and processed. The `content` field is extracted from the `choices` array, which contains the GPT-3 model's generated response. This response text is then displayed in `Label2`. The original input text is also displayed in `Label3`, and `TextBox1` is cleared.

Please note that these are general interpretations based on the information provided. The precise behavior of the app might vary and is subject to the entire codebase and the runtime environment.
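To make the request GPT-4 describes more concrete, here is a rough Python equivalent of the Button1.Click and Web1.GotText blocks, using only the standard library. This is a sketch, not the app's actual code. The model name `gpt-3.5-turbo` is an assumption, since the .bky contents are not shown. The `Bearer` prefix is the format the OpenAI API expects in the `Authorization` header. Calling `ask` makes a real, billed API request.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(user_text, api_key, model="gpt-3.5-turbo"):  # model name assumed
    """Equivalent of Button1.Click: POST the user's text as a chat message."""
    body = {"model": model, "messages": [{"role": "user", "content": user_text}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_reply(response_text):
    """Equivalent of Web1.GotText: decode JSON, take choices[0].message.content."""
    decoded = json.loads(response_text)
    return decoded["choices"][0]["message"]["content"]


def ask(user_text, api_key):
    """Send one prompt and return the model's reply text (network call)."""
    with urllib.request.urlopen(build_request(user_text, api_key)) as resp:
        return extract_reply(resp.read().decode("utf-8"))
```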

GPT-4 appears to have understood the project correctly, so next I ask it to add voice input and output.

Prompt

Can you add voice input and voice output functions and generate new project.propertie, Screen1.scm, and Screen1.bky?

Response

To add voice input and output functionality, we will need to add the SpeechRecognizer and TextToSpeech components to our App Inventor project. I will include them in the `Screen1.scm` file.

As for the `Screen1.bky` file, you will need to modify your button click event to use the `SpeechRecognizer.GetText()` method, and in the event where the `SpeechRecognizer.AfterGettingText()`, send that text to the Web API. Also, we will handle the `Web.GotText()` event to extract the response, and feed that into the `TextToSpeech.Speak()` method.

Note that the API key and other critical values in the Blockly file are placeholders and should be replaced with the actual values.

Here is what your modified files might look like:

project.properties:

```
#
#Wed Jun 07 18:19:49 UTC 2023
source=../src
name=Text2ChatGPTWorking
defaultfilescope=App
main=appinventor.ai_tam0130.Text2ChatGPTWorking.Screen1
color.accent=&HFFFF4081
sizing=Responsive
assets=../assets
theme=Classic
showlistsasjson=True
useslocation=False
aname=Text2ChatGPT
actionbar=False
color.primary=&HFF3F51B5
build=../build
versionname=1.0
versioncode=1
color.primary.dark=&HFF303F9F
```

Screen1.scm:

```
#|
$JSON
{"authURL":["ai2.tmsoftwareinc.com"],"YaVersion":"221","Source":"Form","Properties":{"$Name":"Screen1","$Type":"Form","$Version":"30","AppName":"Text2ChatGPT","Title":"Text2ChatGPT","Uuid":"0","$Components":[
{"$Name":"TextBox1","$Type":"TextBox","$Version":"6","Width":"-2","Hint":"\u30d7\u30ed\u30f3\u30d7\u30c8\u3092\u5165\u308c\u3066\u304f\u3060\u3055\u3044","Uuid":"-1671791960"},
{"$Name":"Button1","$Type":"Button","$Version":"7","Text":"\u9001\u4fe1","Uuid":"2041796963"},
{"$Name":"Label3","$Type":"Label","$Version":"5","Text":"\u30d7\u30ed\u30f3\u30d7\u30c8","Uuid":"1877175895"},
{"$Name":"\u30e9\u30d9\u30eb1","$Type":"Label","$Version":"5","Uuid":"1111020969"},
{"$Name":"Label2","$Type":"Label","$Version":"5","Text":"\u8fd4\u7b54","Uuid":"-1790405606"},
{"$Name":"Web1","$Type":"Web","$Version":"8","Url":"https:\/\/api.openai.com\/v1\/chat\/completions","Uuid":"1099905226"},
{"$Name":"SpeechRecognizer1","$Type":"SpeechRecognizer","$Version":"2","Uuid":"1"},
{"$Name":"TextToSpeech1","$Type":"TextToSpeech","$Version":"5","Uuid":"2"}
]}}
|#
```

Screen1.bky:

This file is too large to show here completely, but here are the key changes you would need to make.

For the `Button1.Click` event, instead of immediately sending the text from the `TextBox1` to the API, you would first call `SpeechRecognizer1.GetText()`. This will start the speech recognition process.

Next, you will need to handle the `SpeechRecognizer1.AfterGettingText` event. In this event, you would call the same logic that was originally in the `Button1.Click` event, except you would replace `TextBox1.Text` with the `result` variable from the `SpeechRecognizer1.AfterGettingText` event.

Finally, in the `Web1.GotText` event, you would add a line at the end to call `TextToSpeech1.Speak(result)`, where `result` is the final message string you extracted from the JSON response.

Please remember to replace the placeholders with actual values. Blockly XML format is intricate, so you might want to use the visual interface of MIT App Inventor to make these changes.
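GPT-4 describes a chain of events: Button1.Click, then SpeechRecognizer1.GetText, then AfterGettingText, then the web request, then Web1.GotText, which updates Label2 and calls TextToSpeech1.Speak. The sketch below models that chain in plain Python to make the order of steps explicit. It is only an illustration under several assumptions:

- The `Fake*` classes and `App` are hypothetical stand-ins, not App Inventor APIs.
- The real SpeechRecognizer and Web components are asynchronous, while here each event fires immediately.
- The model name is assumed.

```python
import json

# Hypothetical stand-ins for App Inventor components. Only the order of
# events is modeled; these are not the real component APIs.
class FakeSpeechRecognizer:
    def __init__(self, heard):
        self.heard = heard                # text the "microphone" returns
        self.after_getting_text = None    # handler set by the app

    def get_text(self):
        # The real component is asynchronous; here the event fires at once.
        self.after_getting_text(self.heard)


class FakeWeb:
    def __init__(self, canned_response):
        self.canned_response = canned_response  # JSON the "API" returns
        self.got_text = None
        self.last_body = None

    def post_text(self, body):
        self.last_body = body
        self.got_text(self.canned_response)


class App:
    def __init__(self, recognizer, web):
        self.recognizer, self.web = recognizer, web
        self.label2 = self.label3 = ""
        self.pending = ""
        self.spoken = []                  # what TextToSpeech1.Speak would say
        recognizer.after_getting_text = self.on_after_getting_text
        web.got_text = self.on_got_text

    def on_button1_click(self):
        # Button1.Click: start speech recognition instead of reading TextBox1
        self.recognizer.get_text()

    def on_after_getting_text(self, result):
        # SpeechRecognizer1.AfterGettingText: the old Button1.Click logic,
        # with `result` in place of TextBox1.Text
        if not result:
            return
        self.pending = result
        body = {"model": "gpt-3.5-turbo",  # assumed model name
                "messages": [{"role": "user", "content": result}]}
        self.web.post_text(json.dumps(body))

    def on_got_text(self, response_content):
        # Web1.GotText: extract choices[0].message.content, show it, speak it
        reply = json.loads(response_content)["choices"][0]["message"]["content"]
        self.label2 = reply
        self.label3 = self.pending
        self.spoken.append(reply)


app = App(FakeSpeechRecognizer("Hello"),
          FakeWeb('{"choices": [{"message": {"content": "Hi!"}}]}'))
app.on_button1_click()
print(app.label2, app.spoken)  # prints: Hi! ['Hi!']
```

As in the original Button1.Click logic, an empty recognition result sends no request.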

I modified the App Inventor version of "Let's Talk with ChatGPT! (Part 1)" following these steps, and the app worked without any problems.
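GPT-4's Screen1.scm is a JSON object wrapped in `#|`, `$JSON`, and `|#` lines, as in the file shown earlier. You can therefore parse an edited copy to confirm that the SpeechRecognizer and TextToSpeech components are really there. The sketch below assumes exactly that wrapper. The function names are hypothetical, and it only reads the file; it does not write a new .scm.

```python
import json


def parse_scm(text):
    """Strip the '#| $JSON ... |#' wrapper and return the decoded form."""
    body = text.strip()
    if not (body.startswith("#|") and body.endswith("|#")):
        raise ValueError("not an App Inventor .scm file")
    body = body[2:-2].strip()
    if body.startswith("$JSON"):
        body = body[len("$JSON"):]
    return json.loads(body)


def iter_components(component):
    """Yield every component under `component`, including nested ones."""
    for child in component.get("$Components", []):
        yield child
        yield from iter_components(child)


def component_types(form):
    """List (name, type) pairs for all components on the screen."""
    return [(c["$Name"], c["$Type"]) for c in iter_components(form["Properties"])]


def missing_components(form, required=("SpeechRecognizer", "TextToSpeech")):
    """Return the required component types that the screen does not contain."""
    present = {t for _, t in component_types(form)}
    return [r for r in required if r not in present]
```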